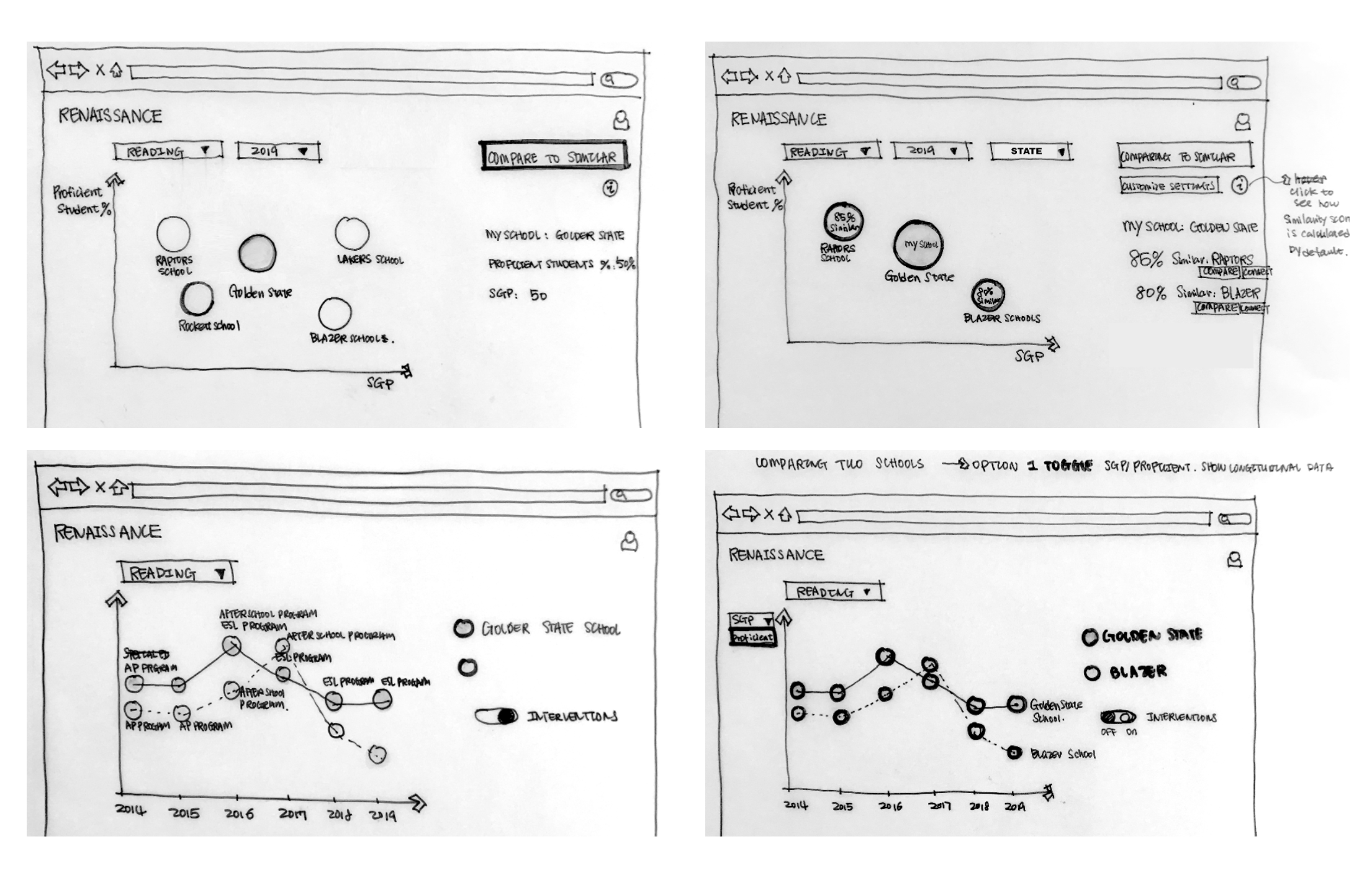

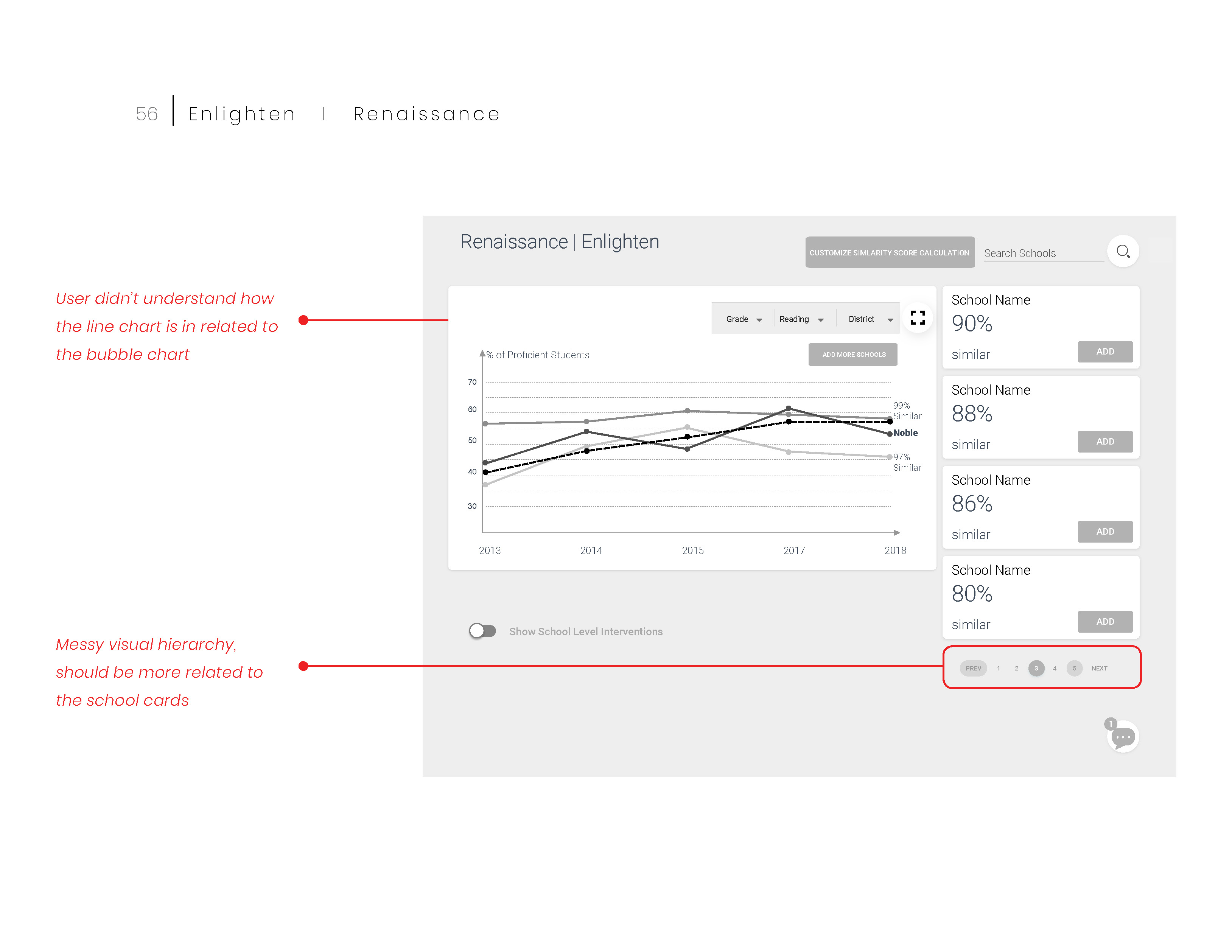

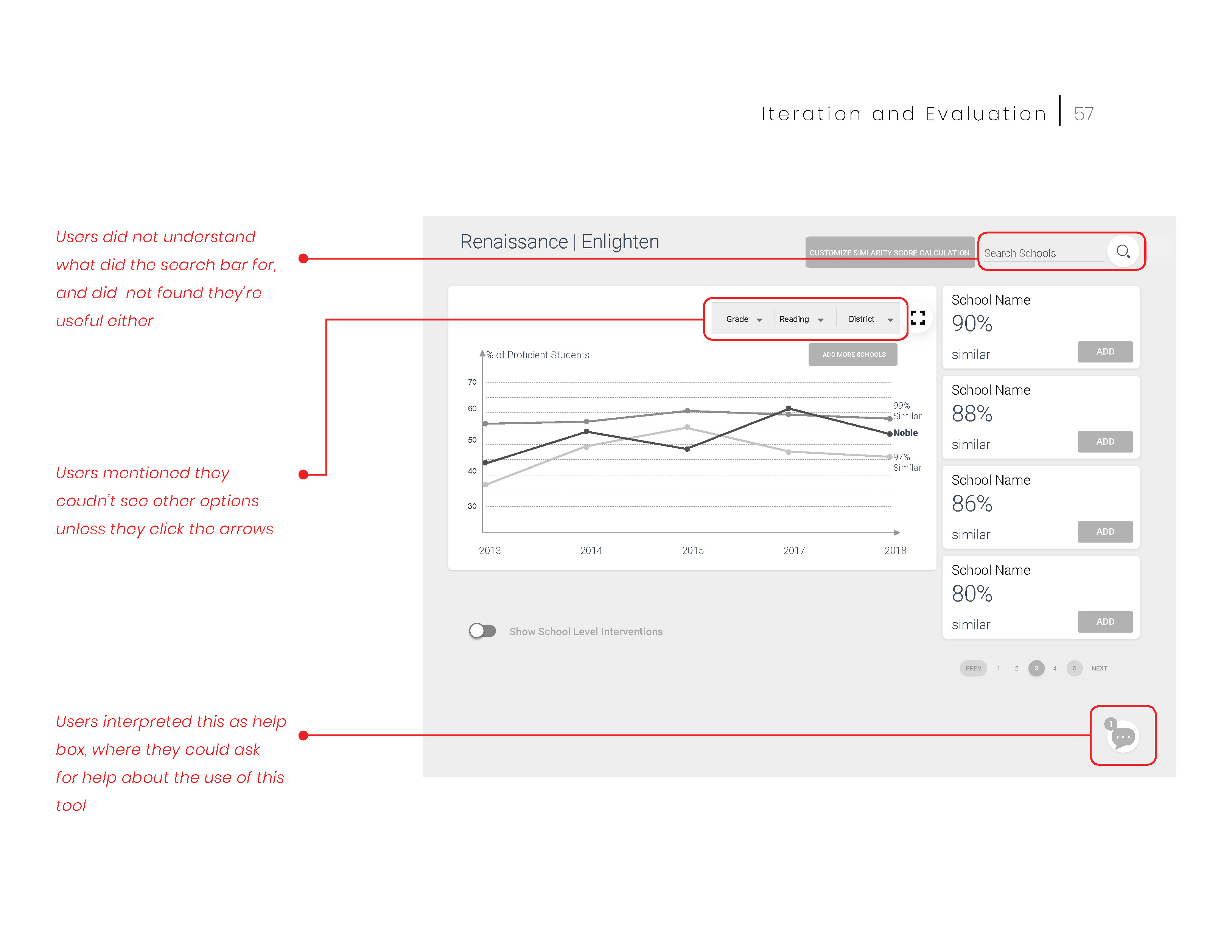

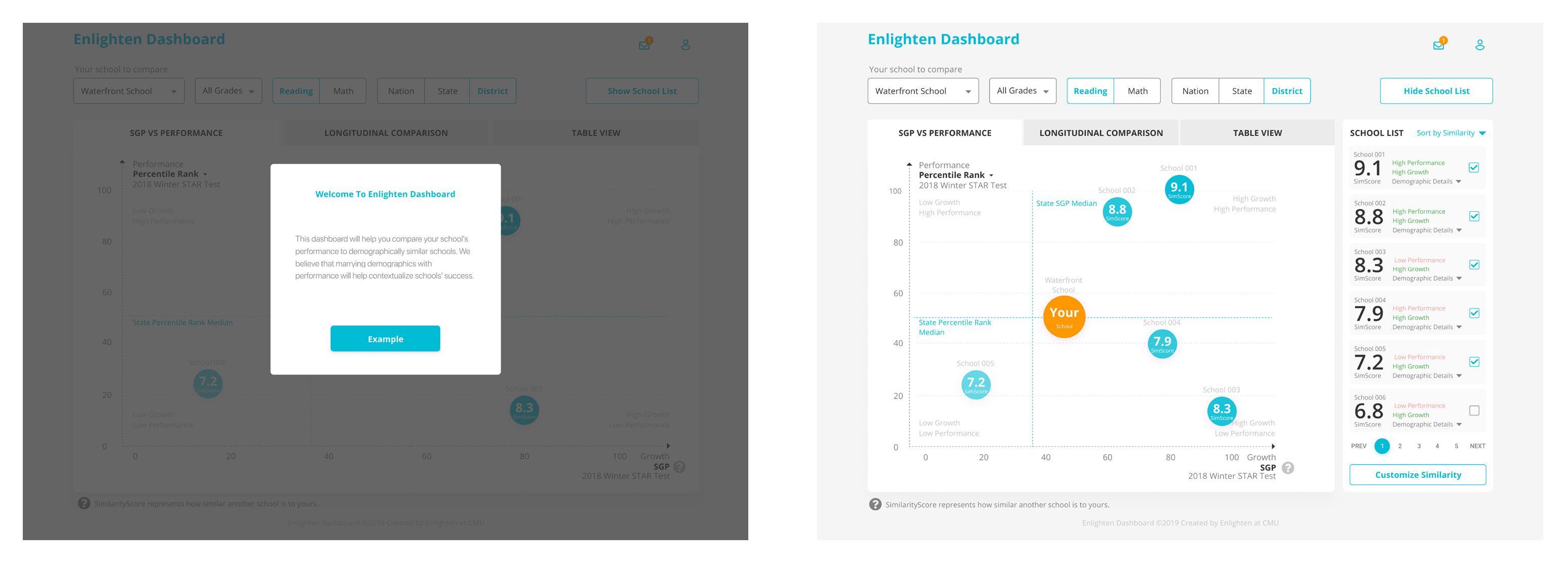

Prototyping allowed us to rapidly evaluate design alternatives so that we could improve our product's usability. We started by developing several different ideas, including line charts, bubble charts, and map diagrams to represent schools' geographic information.

Early heuristic evaluations with experts (district administrators) gave us the following feedback, which guided our low-fidelity paper prototypes:

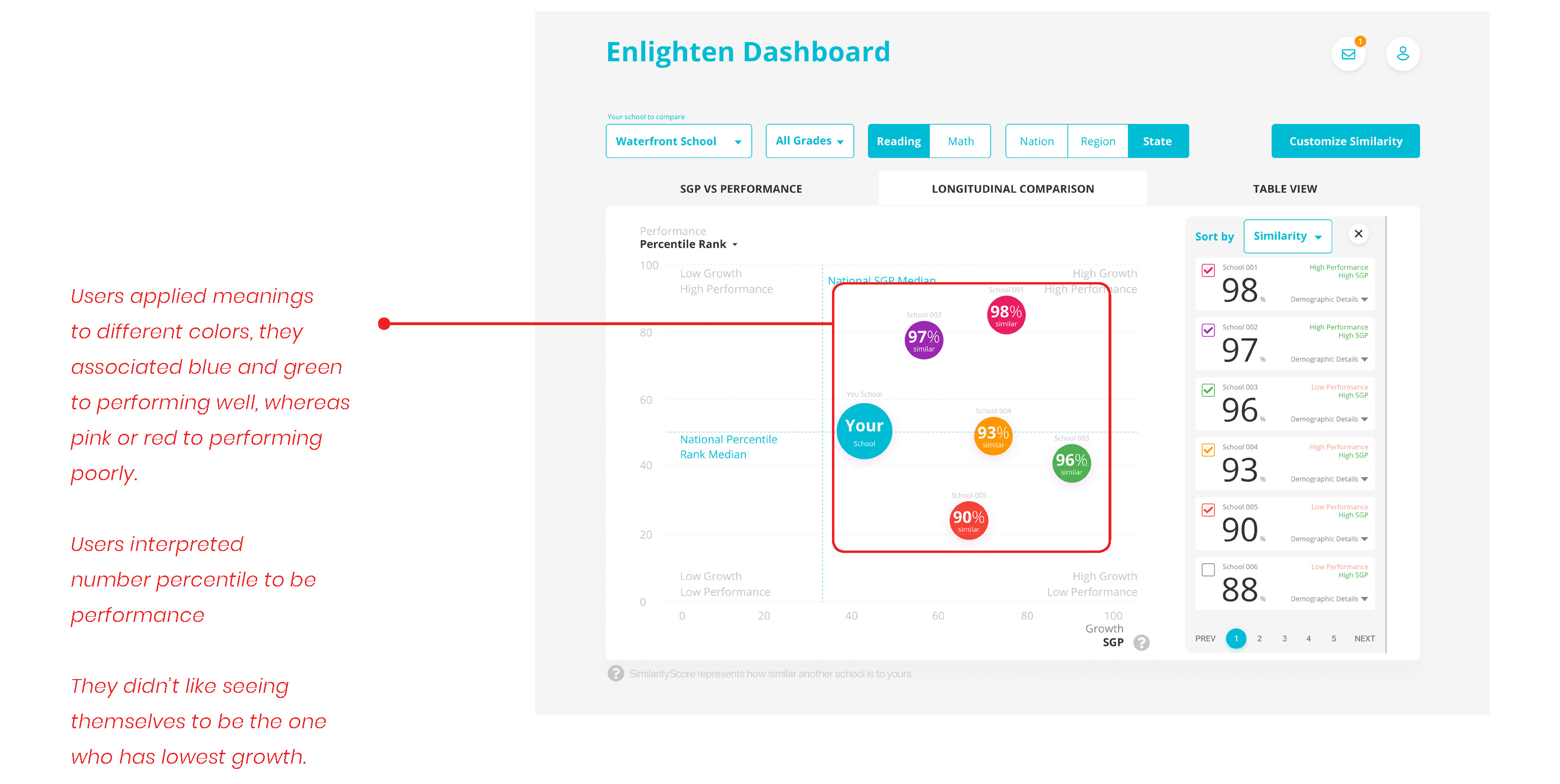

Users generally liked both bubble charts and line charts: bubble charts are a better way to represent different schools, while line charts are better suited to showing longitudinal data.

Users thought it was nice to see the geographic information of other schools, mostly for fun, but the map diagram was not as useful as bubble charts, which show schools' performance and growth, or line charts, which support longitudinal comparison between schools.